Transaction Isolation Levels

In the database world when the same data is accessed by different users concurrently, ACID properties ensure that we can isolate access to the data. ACID stands for atomicity, consistency, isolation and durability

- Atomicity guarantees that all operations either complete or fail so that there would be no partially completed operations

- Consistency ensures that each transaction leaves database content in a usable state so that the next transaction gets to a version of data it can work with.

- Isolation provides a way for multiple transactions to execute concurrently without being aware of other concurrent transactions, and the last property is

- Durability, which ensures that the result of successful transactions are persisted even with system failures

Atomicity, consistency and durability are taken care of by a database "transparently", but isolation is something we can control with SQL. Let's find out what it means exactly.

When two users work with the same data, there is often a race condition. One user modifies the data that another user needs. The results would be different depending on the order of operations. How can we guarantee that users always receive consistent data?

The most simple way would be to always lock the entire data set (think the whole table) for each user, perform the operation, then return the data back to the user. In the database terms that's called serializable read. A big issue with this approach is performance. While the database works on the data-set for one user, no other operation can be completed, thus making it extremely slow and inefficient. Can we do something to improve the performance?

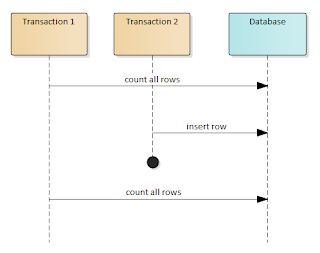

Sure we can, let's say we allow transactions to read aggregate data, including what other transactions created/deleted. In this case, transaction 1 will get different aggregate results depending on what other transactions changed:

When transaction 2 inserts a row, the subsequent counts from transaction 1 will contain an extra row. This is called a phantom read. If we restrict the requirements further to allow reading data committed by other transactions, we may end up with non-repeatable reads:

In this case, if Transaction 1 will re-read the data in row A, it will see the latest value Y, that was updated by Transaction 2, which may be different from the initial value read by Transaction 1.

The least restrictive option would be to allow reading uncommitted data. In this case we may end up have access to data in Transaction 1 that should not exist in the database in the first place:

This is known as dirty reads. In case we use no transactions a lost update may occur. If two updates modify the same row, only the last commit will win.

To control these anomalies, SQL defines different isolation levels. As we loosen restrictions on the isolation levels, we end up improving performance and scalability at the expense of more data anomalies:

- Serializable

(highest) isolation ensures that transactions appear as if they run one

after another with no concurrency. This is usually implemented by

table-level locking, causing serious performance impacts.

- Repeatable-read isolation only allows phantom reads and guarantees that there are no lost updates, dirty reads or non-repeatable reads.

- Read-commited isolation allows phantom and non-repeatable reads, but ensures that there are no lost updates and dirty reads

- Read-uncommited

isolation allows reading data only ensures that lost updates are

prevented. Transactions are free to read any data that other

transactions created or updated.

How would you pick the right level? While this is usually application dependent, there are a few rules of thumb:

- Repeatable read is usually a good starting point, as long as phantom reads are taken care of or determined not to be an issue

- If more concurrency is required, read-committed isolation level is a good idea, as long as data is locked appropriately.

- Using serializable and read-uncommited isolation levels is not the best idea. Serializable provides too high of performance penalty and phantom reads are rarely a problem. Read-uncommited on the other hand is extremely unsafe and may impact other transactions.